Chapter 3 Discrete Random Variables

A statistical experiment produces an outcome in a sample space, but frequently we are more interested in a number that summarizes that outcome. For example, if we randomly select a person with a fever and provide them with a dosage of medicine, the sample space might be the set of all people who currently have a fever, or perhaps the set of all possible people who could currently have a fever. However, we are more interested in the summary value of “how much did the temperature of the patient decrease.” This is a random variable.

Let \(S\) be the sample space of an experiment. A random variable is a function from \(S\) to the real line. Random variables are usually denoted by a capital letter. Many times we will abbreviate the words random variable with rv.

Suppose \(X\) is a random variable. The events of interest for \(X\) are those that can be defined by a set of real numbers. For example, \(X = 2\) is the event consisting of all outcomes \(s \in S\) with \(X(s) = 2\). Similarly, \(X > 8\) is the event consisting of all outcomes \(s \in S\) with \(X(s) > 8\). In general, if \(U \subset \mathbb{R}\): \[ X \in U \text{ is the event } \{s \in S\ |\ X(s) \in U\} \]

Suppose that three coins are tossed. The sample space is \[ S = \{HHH, HHT, HTH, HTT, THH, THT, TTH, TTT\}, \] and all eight outcomes are equally likely, each occurring with probability 1/8. A natural random variable here is the number of heads observed, which we will call \(X\). As a function from \(S\) to the real numbers, \(X\) is given by:

\[\begin{align*} X(HHH) &= 3 \\ X(HHT) = X(HTH) = X(THH) &= 2 \\ X(TTH) = X(THT) = X(HTT) &= 1\\ X(TTT) &= 0 \end{align*}\]

The event \(X = 2\) is the set of outcomes \(\{HHT, HTH, THH\}\) and so: \[ P(X = 2) = P(\{HHT, HTH, THH\}) = \frac{3}{8}. \]

It is often easier, both notationally and for doing computations, to hide the sample space and focus only on the random variable. We will not always explicitly define the sample space of an experiment. It is easier, more intuitive and (for the purposes of this book) equivalent to just understand \(P(a < X < b)\) for all choices of \(a < b\). By understanding these probabilities, we can derive many useful properties of the random variable, and hence, the underlying experiment.

We will consider two types of random variables in this book. Discrete random variables are integers, and often come from counting something. Continuous random variables take values in an interval of real numbers, and often come from measuring something. Working with discrete random variables requires summation, while continuous random variables require integration. We will discuss these two types of random variables separately in this chapter and in Chapter 4.

3.1 Probability mass functions

A discrete random variable is a random variable that takes integer values.14 A discrete random variable is characterized by its probability mass function (pmf). The pmf \(p\) of a random variable \(X\) is given by \[ p(x) = P(X = x). \]

The pmf may be given in table form or as an equation. Knowing the probability mass function determines the discrete random variable, and we will understand the random variable by understanding its pmf.

Probability mass functions satisfy the following properties:

Let \(p\) be the probability mass function of \(X\).

- \(p(x) \ge 0\) for all \(x\).

- \(\sum_x p(x) = 1\).

To check that a function is a pmf, we check that all of its values are probabilities, and that those values sum to one.

The Eurasian lynx is a wild cat that lives in the north of Europe and Asia. When a female lynx gives birth, she may have from 1 to 4 kittens. Our statistical experiment is a lynx giving birth, and the outcome is a litter of baby lynx. Baby lynx are complicated objects, but there is a simple random variable here: the number of kittens. Call this \(X\). Ecologists have estimated15 the pmf for \(X\) to be:

\[ \begin{array}{l|cccc} x & 1 & 2 & 3 & 4 \\ \hline p(x) & 0.18 & 0.51 & 0.27 & 0.04 \end{array} \]

In other words, the probability that a lynx mother has one kitten is 0.18, the probability that she has two kittens is 0.51, and so on. Observe that \(p(1) + p(2) + p(3) + p(4) = 1\), so this is a pmf.

We can use the pmf to calculate the probability of any event defined in terms of \(X\). The probability that a lynx litter has more than one kitten is: \[ P( X > 1 ) = P(X=2) + P(X=3) + P(X=4) = 0.51 + 0.27 + 0.04 = 0.82. \]

Figure 3.1: Eurasian lynx kitten. (Photo credit: Bernard Landgraf)

Bernard Landgraf. This file is licensed under the Creative Commons Attribution-Share Alike 3.0 Unported license. https://commons.wikimedia.org/wiki/File:Lynx_kitten.jpg

We can simulate the lynx litter size random variable \(X\) without having to capture pregnant lynx.

Recall that the R function sample has four arguments: the possible outcomes x, the sample size size to sample,

whether we are sampling with replacement replace, and the probability prob associated with the possible outcomes.

Here we generate values of \(X\) for 30 lynx litters:

litterpmf <- c(0.18, 0.51, 0.27, 0.04)

sample(1:4, 30, replace = TRUE, prob = litterpmf)## [1] 1 2 2 1 2 1 1 2 3 1 3 1 1 3 3 2 1 1 3 2 2 2 3 3 3 1 2 2 3 3With enough samples of \(X\), we can approximate the probability \(P(X > 1)\) as follows:

X <- sample(1:4, 10000, replace = TRUE, prob = litterpmf)

mean(X > 1)## [1] 0.8222In this code, the first line simulates the random variable. The code X > 1 produces a vector of TRUE and FALSE which is TRUE when the event \(X > 1\) occurs. Recall that taking the mean of a TRUE/FALSE vector gives the proportion of times that vector is TRUE, which will be approximately \(P(X > 1)\) here.

We can also recreate the pmf by using table to count values and then dividing by the sample size16:

table(X) / 10000## X

## 1 2 3 4

## 0.1778 0.5134 0.2674 0.0414Let \(X\) denote the number of heads observed when a coin is tossed three times.

In this example, we can simulate the random variable by first simulating experiment outcomes and then calculating \(X\) from those. The following generates three coin flips:

coin_toss <- sample(c("H", "T"), 3, replace = TRUE)Now we calculate how many heads were flipped, and produce one value of \(X\).

sum(coin_toss == "H")## [1] 2Finally, we can use replicate to produce many samples of \(X\):

X <- replicate(10000, {

coin_toss <- sample(c("H", "T"), 3, replace = TRUE)

sum(coin_toss == "H")

})

head(X, 30) # see the first 30 values of X## [1] 1 0 2 2 2 2 0 1 0 2 2 1 0 1 1 2 2 1 0 2 0 3 3 1 2 0 1 0 1 3From the simulation, we can estimate the pmf using table and dividing by the number of samples:

table(X) / 10000## X

## 0 1 2 3

## 0.1279 0.3756 0.3743 0.1222Instead of simulation, we could also calculate the pmf by considering the sample space, which consists of the eight equally likely outcomes HHH, HHT, HTH, HTT, THH, THT, TTH, TTT. Counting heads, we find that \(X\) has the pmf:

\[ \begin{array}{l|cccc} x & 0 & 1 & 2 & 3 \\ \hline p(x) & \frac{1}{8} & \frac{3}{8} & \frac{3}{8} & \frac{1}{8} \end{array} \]

which matches the results of our simulation. Here is an alternative description of \(p\) as a formula: \[ p(x) = {\binom{3}{x}} \left(\frac{1}{2}\right)^x \qquad x = 0,\ldots,3 \]

We always assume that \(p\) is zero for values not mentioned; both in the table version and in the formula version.

As in the lynx example, we may simulate this random variable directly by sampling with probabilities given by the pmf. Here we sample 30 values of \(X\) without “flipping” any “coins”:

sample(0:3, 30, replace = TRUE, prob = c(0.125, 0.375, 0.375, 0.125))## [1] 3 1 3 0 3 1 1 2 3 0 1 2 0 0 0 2 2 1 1 3 3 1 0 2 1 1 1 1 3 1Compute the probability that we observe at least one head when three coins are tossed.

Let \(X\) be the number of heads. We want to compute the probability of the event \(X \ge 1\). Using the pmf for \(X\),

\[\begin{align*} P(1 \le X) &= P(1 \le X \le 3)\\ &=P(X = 1) + P(X = 2) + P(X = 3)\\ &= \frac{3}{8}+ \frac{3}{8} +\frac{1}{8}\\ &= \frac{7}{8} = 0.875. \end{align*}\]

We could also estimate \(P(X \ge 1)\) by simulation:

X <- replicate(10000, {

coin_toss <- sample(c("H", "T"), 3, replace = TRUE)

sum(coin_toss == "H")

})

mean(X >= 1)## [1] 0.8741Suppose you toss a coin until the first time you see heads. Let \(X\) denote the number of tails that you see. We will see later that the pmf of \(X\) is given by \[ p(x) = \left(\frac{1}{2}\right)^{x + 1} \qquad x = 0, 1, 2, 3, \ldots \] Compute \(P(X = 2)\), \(P(X \le 1)\), \(P(X > 1)\), and the conditional probability \(P(X = 2 | X > 1)\).

- To compute \(P(X = 2)\), we just plug in \(P(X = 2) = p(2) = \left(\frac{1}{2}\right)^3 = \frac{1}{8}\).

- To compute \(P(X \le 1)\), we add \(P(X = 0) + P(X = 1) = p(0) + p(1) = \frac{1}{2} + \frac{1}{4} = \frac{3}{4}\).

- The complement of \(X > 1\) is \(X \le 1\), so \[ P(X > 1) = 1 - P(X \le 1) = 1 - \frac{3}{4} = \frac{1}{4}. \] Alternatively, we could compute an infinite sum using the formula for the geometric series: \[ P(X > 1) = p(2) + p(3) + p(4) + \dotsb = \frac{1}{8} + \frac{1}{16} + \frac{1}{32} + \dotsb = \frac{1}{4}. \]

- The formula for conditional probability gives: \[ P(X = 2 | X > 1) = \frac{P(X = 2 \cap X > 1)}{P(X > 1)} = \frac{P(X = 2)}{P(X > 1)} = \frac{1/8}{1/4} = \frac{1}{2}.\] This last answer makes sense because \(X > 1\) requires the first two flips to be tails, and then there is a \(\frac{1}{2}\) chance your third flip will be heads and achieve \(X = 2\).

3.2 Expected value

Suppose you perform a statistical experiment repeatedly, and observe the value of a random variable \(X\) each time. The average of these observations will (under most circumstances) converge to a fixed value as the number of observations becomes large. This value is the expected value of \(X\), written \(E[X]\). The definition looks different than this, and our intuitive explanation of the expected value is actually Theorem 3.2.

For a discrete random variable \(X\) with pmf \(p\), the expected value of \(X\) is \[ E[X] = \sum_x x p(x), \] provided this sum exists, where the sum is taken over all possible values of the random variable \(X\).

Another word for the expected value of \(X\) is the mean of \(X\).

The mean of \(n\) observations of a random variable \(X\) converges to the expected value \(E[X]\) as \(n \to \infty\), assuming \(E[X]\) is defined.

Using simulation, we determine the expected value of a die roll. Here are 30 observations and their average:

rolls <- sample(1:6, 30, replace = TRUE)

rolls## [1] 3 3 3 3 1 5 1 2 2 3 3 6 5 1 1 6 6 5 2 6 5 2 5 2 6 1 3 6 6 5mean(rolls)## [1] 3.6The mean appears to be somewhere between 3 and 4. Using more trials gives more accuracy:

rolls <- sample(1:6, 100000, replace = TRUE)

mean(rolls)## [1] 3.4934Not surprisingly, the mean value is balanced halfway between 1 and 6, at 3.5.

Using the probability distribution of a random variable \(X\), one can compute the expected value \(E[X]\) exactly, as in the following example.

Let \(X\) be the value of a six-sided die roll. Since the probability of each outcome is \(\frac{1}{6}\), we have: \[ E[X] = 1\cdot\frac{1}{6} + 2\cdot\frac{1}{6} +3\cdot\frac{1}{6} +4\cdot\frac{1}{6} +5\cdot\frac{1}{6} +6\cdot\frac{1}{6} = \frac{21}{6} = 3.5 \]

Let \(X\) be the number of kittens in a Eurasian lynx litter. Then \[ E[X] = 1\cdot p(1) + 2 \cdot p(2) + 3 \cdot p(3) + 4 \cdot p(4) = 0.18 + 2\cdot 0.51 + 3\cdot0.27 + 4\cdot0.04 = 2.17 \] This means that, on average, the Eurasian lynx has 2.17 kittens.

We can perform the computation of \(E[X]\) using R:

litterpmf <- c(0.18, 0.51, 0.27, 0.04)

sum((1:4) * litterpmf)## [1] 2.17Alternatively, we may estimate \(E[X]\) by simulation, using the Law of Large Numbers.

X <- sample(1:4, 10000, replace = TRUE, prob = litterpmf)

mean(X)## [1] 2.1645The expected value need not be a possible outcome associated with the random variable. You will never roll a 3.5, and a lynx mother will never have 2.17 kittens. The expected value describes the average of many observations.

Let \(X\) denote the number of heads observed when three coins are tossed. The pmf of \(X\) is given by \(p(x) = {\binom{3}{x}} (1/2)^x\), where \(x = 0,\ldots,3\). The expected value of \(X\) is

\[ E[X] = 0 \cdot \frac{1}{8} + 1 \cdot \frac{3}{8} + 2 \cdot \frac{3}{8} + 3 \cdot \frac{1}{8} = \frac{3}{2} . \]

We can check this with simulation:

X <- replicate(10000, {

coin_toss <- sample(c("H", "T"), 3, replace = TRUE)

sum(coin_toss == "H")

})

mean(X)## [1] 1.4932The answer is approximately 1.5, which is what our exact computation of \(E[X]\) predicted.

Consider the random variable \(X\) which counts the number of tails observed before the first head when a fair coin is repeatedly tossed. The pmf of \(X\) is \(p(x) = 0.5^{x + 1}\) for \(x = 0, 1, 2, \ldots\). Finding the expected value requires summing an infinite series, which we leave as an exercise. Instead, we estimate the infinite sum via a finite sum, and we use simulation.

We assume (see below for a justification) that the infrequent results of \(x \ge 100\) do not impact the expected value much. That is, we assume that \(\sum_{x = 0}^{99} x p(x) \approx E[X]\). We can use R to compute this:

xs <- 0:99

sum(xs * (0.5)^(xs + 1))## [1] 1In order to estimate the sum via simulation, we take a sample of size 10,000 and follow the same steps as in the previous example, again assuming that values of 100 or larger do not affect the expected value.

xs <- 0:99

probs <- .5^xs

X <- sample(0:99, 10000, replace = TRUE, prob = probs)

mean(X)## [1] 1.0172We estimate that the expected value \(E[X] \approx 1\).

To justify that we do not need to include values of \(x\) bigger than 99, note that

\[\begin{align*} E[X] - \sum_{x = 0}^{99} x (1/2)^{x + 1} &= \sum_{x=100}^\infty x (1/2)^{x + 1} \\ &< \sum_{x=100}^\infty 2^{x/2 - 1} (1/2)^{x + 1}\\ &= \sum_{x = 100}^\infty 2^{-x/2}\\ &= 2^{-50} \frac{1}{2^{49}(2 - \sqrt{2})} < 10^{-14} \end{align*}\]

So, truncating the sum at \(x = 99\) introduces a negligible error. The Loops in R vignette at the end of this chapter shows an approach using loops that avoids truncation.

We end this short section with an example of a discrete random variable that has expected value of \(\infty\). Let \(X\) be a random variable such that \(P(X = 2^x) = 2^{-x}\) for \(x = 1,2,\ldots\). We see that \(\sum_{x=1}^\infty x p(x) = \sum_{x=1}^\infty 2^x 2^{-x} = \infty\). If we truncated the sum associated with \(E[X]\) for this random variable at any finite point, then we would introduce a very large error!

3.3 Binomial and geometric random variables

The binomial and geometric random variables are common and useful models for many real situations. Both involve Bernoulli trials, named after the 17th century Swiss mathematician Jacob Bernoulli.

A Bernoulli trial is an experiment that can result in two outcomes, which we will denote as “success” and “failure.” The probability of a success will be denoted \(p\), and the probability of failure is therefore \(1-p\).

The following are examples of Bernoulli trials, at least approximately.

Toss a coin. Arbitrarily define heads to be success. Then \(p = 0.5\).

Shoot a free throw, in basketball. Success would naturally be making the shot, failure missing the shot. Here \(p\) varies depending on who is shooting. An excellent basketball player might have \(p = 0.8\).

Ask a randomly selected voter whether they support a ballot proposition. Here success would be a yes vote, failure a no vote, and \(p\) is likely unknown but of interest to the person doing the polling.

Roll a die, and consider success to be rolling a six. Then \(p = 1/6\).

A Bernoulli process is a sequence (finite or infinite) of repeated, identical, independent Bernoulli trials. Repeatedly tossing a coin is a Bernoulli process. Repeatedly trying to roll a six is a Bernoulli process. Is repeated free throw shooting a Bernoulli process? There are two reasons that it might not be. First, if the person shooting is a beginner, then they would presumably get better with practice. That would mean that the probability of a success is not the same for each trial (especially if the number of trials is large), and the process would not be Bernoulli. Second, there is the more subtle question of whether free throws are independent. Does a free throw shooter get “hot” and become more likely to make the shot following a success? There is no way to know for sure, although research17 suggests that repeated free throws are independent and that modeling free throws of experienced basketball players with a Bernoulli process is reasonable.

This section discusses two discrete random variables coming from a Bernoulli process: the binomial random variable which counts the number of successes in a fixed number of trials, and the geometric random variable, which counts the number of trials before the first success.

3.3.1 Binomial

A random variable \(X\) is said to be a binomial random variable with parameters \(n\) and \(p\) if \[ P(X = x) = {\binom{n}{x}} p^x (1 - p)^{n - x}\qquad x = 0, 1, \ldots, n. \] We will sometimes write \(X \sim \text{Binom}(n,p)\)

The most important example of a binomial random variable comes from counting the number of successes in a Bernoulli process of length \(n\). In other words, if \(X\) counts the number of successes in \(n\) independent and identically distributed Bernoulli trials, each with probability of success \(p\), then \(X \sim \text{Binom}(n, p)\). Indeed, many texts define binomial random variables in this manner, and we will use this alternative definition of a binomial random variable whenever it is convenient for us to do so.

One way to obtain a Bernoulli process is to sample with replacement from a population. If an urn contains 5 white balls and 5 red balls, and you count drawing a red ball as a “success,” then repeatedly drawing balls will be a Bernoulli process as long as you replace the ball back in the urn after you draw it. When sampling from a large population, we can sample without replacement and the resulting count of successes will be approximately binomial.

Let \(X\) denote the number of heads observed when three coins are tossed. Then \(X \sim \text{Binom}(3,0.5)\) is a binomial random variable. Here \(n = 3\) because there are three independent Bernoulli trials, and \(p = 0.5\) because each coin has probability \(0.5\) of heads.

If \(X\) counts the number of successes in \(n\) independent and identically distributed Bernoulli trials, each with probability of success \(p\), then \(X \sim \text{Binom}(n, p)\).

There are \(n\) trials. There are \(\binom{n}{x}\) ways to choose \(x\) of these \(n\) trials as the successful trials. For each of these ways, \(p^x(1-p)^{n-x}\) is the probability of having \(x\) successes in the chosen spots and \(n-x\) failures in the not chosen spots.

The binomial theorem says that: \[ (a + b)^n = a^n + {\binom{n}{1}}a^{n-1}b^1 + {\binom{n}{2}}a^{n-2}b^2 + \dotsb + {\binom{n}{n-1}}a^1 b^{n-1} + b^n \] Substituting \(a = p\) and \(b = 1-p\), the left-hand side becomes \((p + 1-p)^n = 1^n = 1\), and so: \[\begin{align*} 1 &= p^n + {\binom{n}{1}}p^{n-1}(1-p)^1 + {\binom{n}{2}}p^{n-2}(1-p)^2 + \dotsb + {\binom{n}{n-1}}p^1 (1-p)^{n-1} + (1-p)^n\\ &= P(X = n) + P(X = n-1) + P(X = n-2) + \dotsb + P(X = 1) + P(X = 0), \end{align*}\] which shows that the pmf for the binomial distribution does sum to 1, as it must.

In R, the function dbinom provides the pmf of the binomial distribution:

dbinom(x, size = n, prob = p) gives \(P(X = x)\) for \(X \sim \text{Binom}(n,p)\).

If we provide a vector of values for x, and a single value of size and prob, then dbinom will compute \(P(X = x)\) for all of the values in x.

The d suggests “distribution,” and the root binom identifies the binomial distribution. R uses this combination of prefix letter and root word to

specify many functions for working with random variables.

A useful idiom for working with discrete probabilities is sum(dbinom(vec, size, prob)). If vec contains distinct values, the sum computes the probability that \(X\) is in the set described by vec.

For \(X\) the number of heads when three coins are tossed, the pmf is

\[ P(X = x) = \begin{cases} 1/8 & x = 0,3\\3/8 & x = 1,2 \end{cases} \]

Computing with R,

x <- 0:3

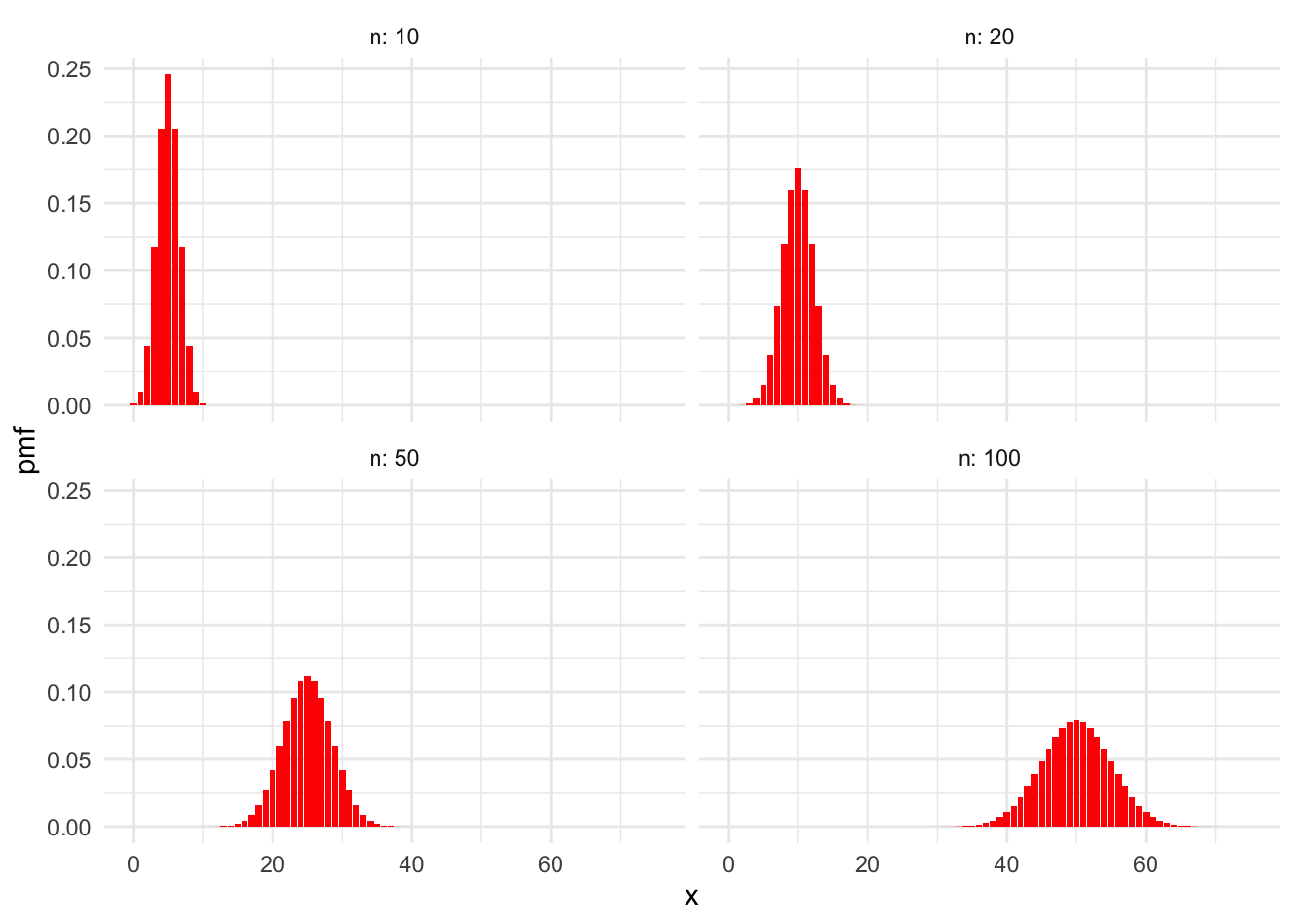

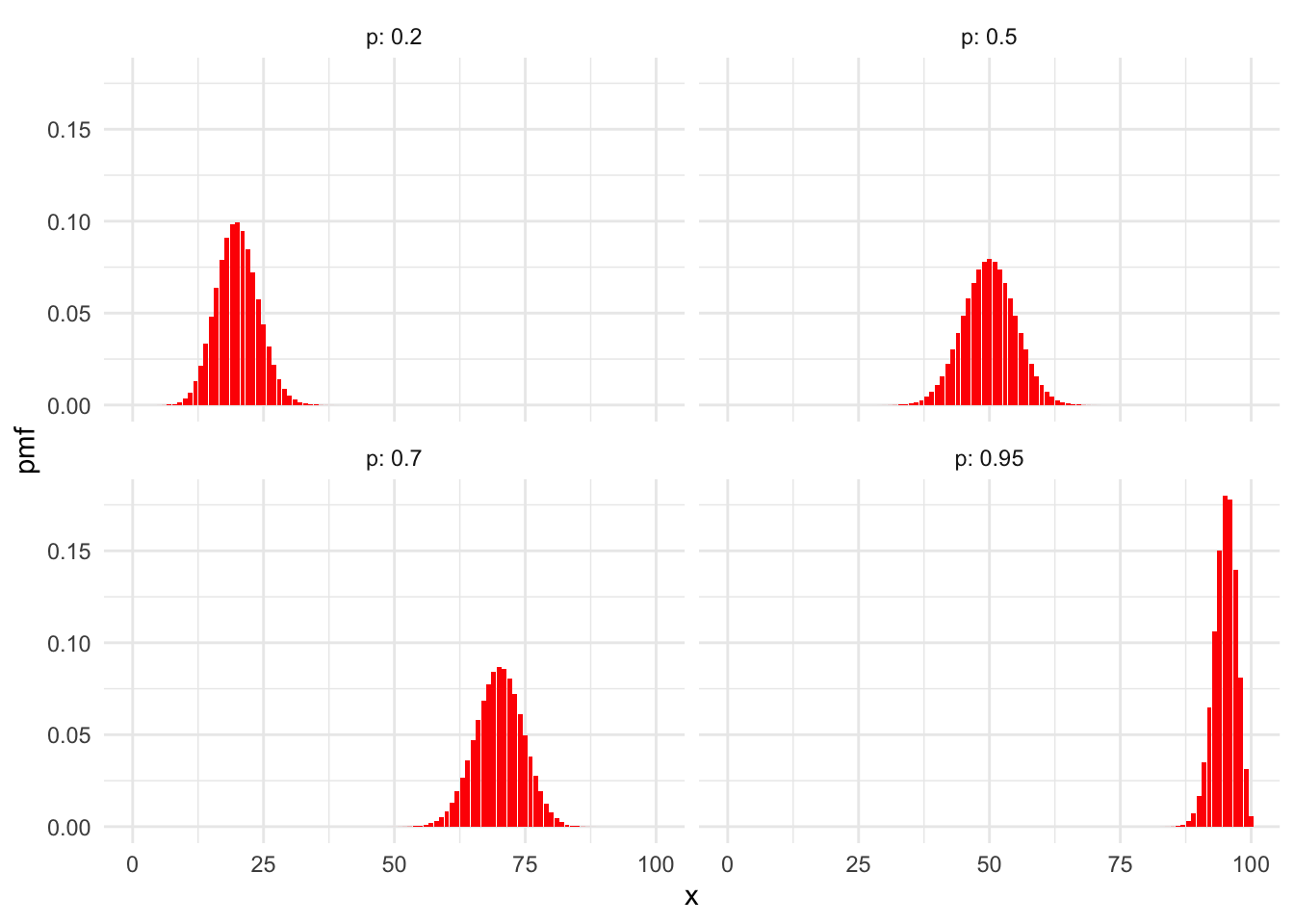

dbinom(x, size = 3, prob = 0.5)## [1] 0.125 0.375 0.375 0.125Figure 3.2 shows sample plots of the pmf of a binomial rv for various values of \(n\) when \(p= .5\), while Figure 3.3 shows the binomial pmf for \(n = 100\) and various \(p\). In these plots of binomial pmfs, the distributions are roughly balanced around a peak. The balancing point is the expected value of the random variable, which for binomial rvs is quite intuitive: it is simply \(np\).

Figure 3.2: Binomial distributions with \(p = 0.5\) and various values of \(n\).

Figure 3.3: Binomial distributions with \(n = 100\) and various values of \(p\).

Let \(X\) be a binomial random variable with \(n\) trials and probability of success \(p\). Then \[ E[X] = np \]

We will see a simple proof of this once we talk about the expected value of the sum of random variables. Here is a proof using the pmf and the definition of expected value directly.

The binomial theorem says that \[ (a + b)^n = a^n + {\binom{n}{1}}a^{n-1}b^1 + {\binom{n}{2}}a^{n-2}b^2 + \dotsb + {\binom{n}{n-1}}a^1 b^{n-1} + b^n. \] Take the derivative of both sides with respect to \(a\): \[ n(a + b)^{n-1} = na^{n-1} + (n-1){\binom{n}{1}}a^{n-2}b^1 + (n-2){\binom{n}{2}}a^{n-3}b^2 + \dotsb + 1{\binom{n}{n-1}}a^0 b^{n-1} + 0 \] and multiply both sides by \(a\): \[ an(a + b)^{n-1} = na^{n} + (n-1){\binom{n}{1}}a^{n-1}b^1 + (n-2){\binom{n}{2}}a^{n-2}b^2 + \dotsb + 1{\binom{n}{n-1}}a^1 b^{n-1} + 0.\] Finally, substitute \(a = p\), \(b = 1-p\), and note that \(a + b = 1\): \[ pn = np^{n} + (n-1){\binom{n}{1}}p^{n-1}(1-p)^1 + (n-2){\binom{n}{2}}p^{n-2}(1-p)^2 + \dotsb + 1{\binom{n}{n-1}}p^1 (1-p)^{n-1} + 0(1-p)^n \] or \[ pn = \sum_{x = 0}^{n} x {\binom{n}{x}}p^x(1-p)^{n-x} = E[X] \] since for \(X \sim \text{Binom}(n,p)\), the pmf is given by \(P(X = x) = {\binom{n}{x}}p^{x}(1-p)^{n-x}\)

Suppose 100 dice are thrown. What is the expected number of sixes? What is the probability of observing 10 or fewer sixes?

We assume that the results of the dice are independent and that the probability of rolling a six is \(p = 1/6\). The random variable \(X\) is the number of sixes observed, and \(X \sim \text{Binom}(100,1/6)\).

Then \(E[X] = 100 \cdot \frac{1}{6} \approx 16.67\). That is, we expect 1/6 of the 100 rolls to be a six.

The probability of observing 10 or fewer sixes is \[ P(X \le 10) = \sum_{x=0}^{10} P(X = x) = \sum_{x=0}^{10} {\binom{100}{x}}(1/6)^x(5/6)^{100-x} \approx 0.0427. \] In R,

sum(dbinom(0:10, 100, 1 / 6))## [1] 0.04269568R also provides the function pbinom, which is the cumulative sum of the pmf.

The cumulative sum of a pmf is important enough that it gets its own name: the cumulative distribution function.

We will say more about the cumulative distribution function in general in Section 4.1.

pbinom(x, size = n, prob = p) gives \(P(X \leq x)\) for \(X \sim \text{Binom}(n,p)\).

In the previous example, we could compute \(P(X \le 10)\) as

pbinom(10, 100, 1 / 6)## [1] 0.04269568Suppose Peyton and Riley are running for office, and 46% of all voters prefer Peyton. A poll randomly selects 300 voters from a large population and asks their preference. What is the expected number of voters who will report a preference for Peyton? What is the probability that the poll results suggest Peyton will win?

Let “success” be a preference for Peyton, and \(X\) be the random variable equal to the number of polled voters who prefer Peyton. It is reasonable to assume that \(X \sim \text{Binom}(300,0.46)\) as long as our sample of 300 voters is a small portion of the population of all voters.

We expect that \(0.46 \cdot 300 = 138\) of the 300 voters will report a preference for Peyton.

For the poll results to show Peyton in the lead, we need \(X > 150\). To compute \(P(X > 150)\), we use \(P(X > 150) = 1 - P(X \leq 150)\) and then

1 - pbinom(150, 300, 0.46)## [1] 0.07398045There is about a 7.4% chance the poll will show Peyton in the lead, despite their imminent defeat.

R provides the function rbinom to simulate binomial random variables.

The first argument to rbinom is the number of random values to simulate, and the next arguments are size = n and prob = p. Here are 15 simulations of the Peyton vs. Riley poll:

rbinom(15, size = 300, prob = 0.46)## [1] 132 116 129 139 165 137 138 142 134 140 140 134 134 126 149In this series of simulated polls, Peyton appears to be losing in all except the fifth poll where she was preferred by \(165/300 = 55\%\) of the selected voters.

We can compute \(P(X > 150)\) by simulation

X <- rbinom(10000, 300, 0.46)

mean(X > 150)## [1] 0.0714which is close to our theoretical result that Peyton should appear to be winning 7.4% of the time.

Finally, we can estimate the expected number of people in our poll who say they will vote for Peyton and compare that to the value of 138 that we calculated above.

mean(X) # estimate of expected value## [1] 137.9337As a final note, all of the simulations of random variables in R provide random samples from the specified distributions. That means that the outcomes of trials do not influence the outcomes of the other trials. See Definition 3.34 for a precise definition.

3.3.2 Geometric

A random variable \(X\) is said to be a geometric random variable with parameter \(p\) if \[ P(X = x) = (1 - p)^{x} p, \qquad x = 0, 1,2, \ldots \]

Let \(X\) be the random variable that counts the number of failures before the first success in a Bernoulli process with probability of success \(p\). Then \(X\) is a geometric random variable.

The only way to achieve \(X = x\) is to have the first \(x\) trials result in failure and the next trial result in success. Each failure happens with probability \(1-p\), and the final success happens with probability \(p\). Since the trials are independent, we multiply \(1-p\) a total of \(x\) times, and then multiply by \(p\).

As a check, we show that the geometric pmf does sum to one. This requires summing an infinite geometric series: \[ \sum_{x=0}^{\infty} p(1-p)^x = p \sum_{x=0}^\infty (1-p)^x = p \frac{1}{1-(1-p)} = 1 \]

We defined the geometric random variable \(X\) so that it is compatible with functions built into R. Some sources let \(Y\) be the number of trials required for the first success, and call \(Y\) geometric. In that case \(Y = X + 1\), as the final success counts as one additional trial.

The functions dgeom, pgeom, and rgeom are available for working with a geometric random variable \(X \sim \text{Geom}(p)\):

dgeom(x,p)is the pmf and gives \(P(X = x)\).pgeom(x,p)gives \(P(X \leq x)\).rgeom(N,p)simulates \(N\) random values of \(X\).

A die is tossed until the first six occurs. What is the probability that it takes 4 or more tosses?

We define success as a roll of six, and let \(X\) be the number of failures before the first success. Then \(X \sim \text{Geom}(1/6)\), a geometric random variable with probability of success \(1/6\).

Taking 4 or more tosses corresponds to the event \(X \geq 3\). Theoretically,

\[

P(X \ge 3) = \sum_{x=3}^\infty P(X = x) = \sum_{x=3}^\infty \frac{1}{6}\cdot\left(\frac{5}{6}\right)^x = \frac{125}{216} \approx 0.58.

\]

We cannot perform the infinite sum with dgeom, but we can come close by summing to a large value of \(x\):

sum(dgeom(3:1000, 1 / 6))## [1] 0.5787037Another approach is to apply rules of probability to see that \(P(X \ge 3) = 1 - P(X < 3)\). Since \(X\) is discrete, \(X < 3\) and \(X \leq 2\) are the same event. Then \(P(X \ge 3) = 1 - P(X \le 2)\):

1 - sum(dgeom(0:2, 1 / 6))## [1] 0.5787037Rather than summing the pmf, we may use pgeom:

1 - pgeom(2, 1 / 6)## [1] 0.5787037The function pgeom has an option lower.tail=FALSE which makes it compute \(P(X > x)\) rather than \(P(X \le x)\), leading to maybe the most concise method:

pgeom(2, 1 / 6, lower.tail = FALSE)## [1] 0.5787037Finally, we can use simulation to approximate the result:

X <- rgeom(10000, 1 / 6)

mean(X >= 3)## [1] 0.581All of these show there is about a 0.58 probability that it will take four or more tosses to roll a six.

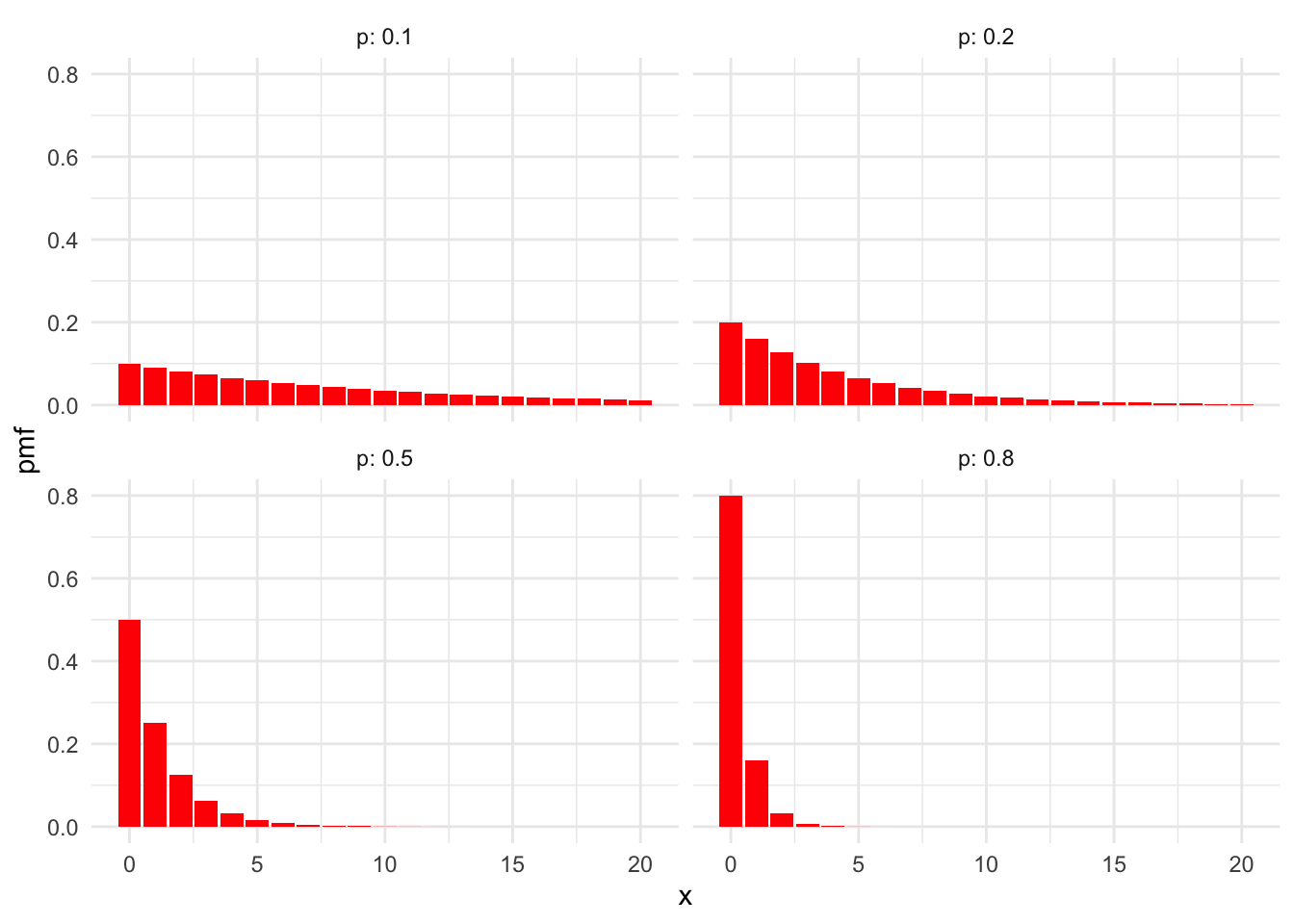

Figure 3.4 shows the probability mass functions for geometric random variables with various \(p\). Observe that for smaller \(p\), we see that \(X\) is likely to be larger. The lower the probability of success, the more failures we expect before our first success.

Figure 3.4: PMFs for geometric random variables with various values of \(p\).

Let \(X\) be a geometric random variable with probability of success \(p\). Then \[ E[X] = \frac{(1-p)}{p} \]

Let \(X\) be a geometric rv with success probability \(p\). Let \(q = 1-p\) be the failure probability. We must compute \(E[X] = \sum_{x = 0}^\infty x pq^x\). Begin with a geometric series in \(q\): \[ \sum_{x = 0}^\infty q^x = \frac{1}{1-q} \] Take the derivative of both sides with respect to \(q\): \[ \sum_{x = 0}^\infty xq^{x-1} = \frac{1}{(1-q)^2} \] Multiply both sides by \(pq\): \[ \sum_{x = 0}^\infty xpq^x = \frac{pq}{(1-q)^2} \] Replace \(1-q\) with \(p\) and we have shown: \[ E[X] = \frac{q}{p} = \frac{1-p}{p} \]

Roll a die until a six is tossed. What is the expected number of rolls?

The expected number of failures is given by \(X \sim \text{Geom}(1/6)\), and so we expect \(\frac{5/6}{1/6} = 5\) failures before the first success. Since the number of total rolls is one more than the number of failures, we expect 6 rolls, on average, to get a six.

Professional basketball player Steve Nash was a 90% free-throw shooter over his career. If Steve Nash starts shooting free throws, how many would he expect to make before missing one? What is the probability that he could make 20 in a row?

Let \(X\) be the random variable which counts the number of free throws Steve Nash makes before missing one. We model a Steve Nash free throw as a Bernoulli trial, but we choose “success” to be a missed free throw, so that \(p = 0.1\) and \(X \sim \text{Geom}(0.1)\). The expected number of “failures” is \(E[X] = \frac{0.9}{0.1} = 9\), which means we expect Steve to make 9 free throws before missing one.

To make 20 in a row requires \(X \ge 20\). Using \(P(X \ge 20) = 1 - P(X \le 19)\),

1 - pgeom(19, 0.1)## [1] 0.1215767we see that Steve Nash could run off 20 (or more) free throws in a row about 12% of the times he wants to try.

3.4 Functions of a random variable

Recall that a random variable \(X\) is a function from the sample space \(S\) to \(\mathbb{R}\). Given a function \(g : \mathbb{R} \to \mathbb{R},\) we can form the random variable \(g \circ X\), usually written \(g(X)\).

For example, the mean number of births per day in the United States is about 10300. Suppose we pick a day in February and observe \(X\) the number of births on that day. We might be more interested in the deviation from the mean \(X - \mu\) or the absolute deviation from the mean \(|X - \mu|\) of the measurement than we are in \(X\), the value of the measurement. The values \(X - \mu\) and \(|X - \mu|\) are examples of functions of a random variable.

As another example, if \(X\) is the value from a six-sided die roll and \(g(x) = x^2\), then \(g(X) = X^2\) is the value of a single six-sided die roll, squared. Here \(X^2\) can take the values \(1, 4, 9, 16, 25, 36\), all equally likely.

The probability mass function of \(g(X)\) can be computed as follows. For each \(y\), the probability that \(g(X) = y\) is given by \(\sum p(x)\), where the sum is over all values of \(x\) such that \(g(x) = y\).

Let \(X\) be the value when a six-sided die is rolled and let \(g(X) = X^2\). The probability mass function of \(g(X)\) is given by

\[ \begin{array}{l|cccccc} x & 1 & 4 & 9 & 16 & 25 & 36 \\ \hline p(x) & 1/6 & 1/6 & 1/6 & 1/6 & 1/6 & 1/6 \end{array} \]

This is because for each value of \(y\), there is exactly one \(x\) with positive probability for which \(g(x) = y\).

Let \(X\) be the value when a six-sided die is rolled and let \(g(X) = |X - 3.5|\) be the absolute deviation from the expected value.18 The probability that \(g(X) = .5\) is 2/6 because \(g(4) = g(3) = 0.5\), and \(p(4) + p(3) = 2/6\). The full probability mass function of \(g(X)\) is given by

\[ \begin{array}{l|ccc} x & 0.5 & 1.5 & 2.5 \\ \hline p(x) & 1/3 & 1/3 & 1/3 \end{array} \]

Note that we could then find the expected value of \(g(X)\) by applying the definition of expected value to the probability mass function of \(g(X)\). It is usually easier, however, to apply the following theorem.

Let \(X\) be a discrete random variable with probability mass function \(p\), and let \(g\) be a function. Then, \[ E\left[g(X)\right] = \sum g(x) p(x). \]

Let \(X\) be the value of a six-sided die roll. Then whether we use the pmf calculated in Example 3.26 or Theorem 3.7, we get that \[ E[X^2] = 1^2\cdot 1/6 + 2^2\cdot 1/6 + 3^2\cdot 1/6 + 4^2\cdot 1/6 + 5^2\cdot 1/6 + 6^2\cdot 1/6 = 91/6 \approx 15.2\] Computing with simulation is straightforward:

X <- sample(1:6, 10000, replace = TRUE)

mean(X^2)## [1] 15.2356Let \(X\) be the number of heads observed when a fair coin is tossed three times.

Compute the expected value of \((X-1.5)^2\).

Since \(X\) is a binomial random variable with \(n = 3\) and \(p = 1/2\), it has pmf \(p(x) = {\binom{3}{x}} (.5)^3\) for \(x = 0,1,2,3\).

We compute

\[

\begin{split}

E[(X-1.5)^2] &= \sum_{x=0}^3(x-1.5)^2p(x)\\

&= (0-1.5)^2\cdot 0.125 + (1-1.5)^2 \cdot 0.375 +

(2-1.5)^2 \cdot 0.375 + (3-1.5)^2 \cdot 0.125\\

&= 0.75

\end{split}

\]

Since dbinom gives the pdf for binomial rvs, we can perform this exact computation in R:

x <- 0:3

sum((x - 1.5)^2 * dbinom(x, 3, 0.5))## [1] 0.75We conclude this section with two simple but important observations about expected values. First, expected value is linear. Second, the expected value of a constant is that constant. Stated precisely:

For random variables \(X\) and \(Y\), and constants \(a\), \(b\), and \(c\):

- \(E[aX + bY] = aE[X] + bE[Y]\)

- \(E[c] = c\)

The proofs follow from the definition of expected value and the linearity of summation. With these theorems in hand, we can provide a much simpler proof for the formula of the expected value of a binomial random variable.

Let \(X \sim \text{Binom}(n, p)\). Show that \(E[X] = np\).

The variable \(X\) is the number of successes in \(n\) Bernoulli trials, each with probability \(p\). Let \(X_1, \ldots, X_n\) be independent Bernoulli random variables with probability of success \(p\). That is, \(P(X_i = 1) = p\) and \(P(X_i = 0) = 1 - p\). It follows that \(X = \sum_{i = 1}^n X_i\). Therefore,

\[\begin{align*} E[X] &= E\left[\sum_{i = 1}^n X_i\right]\\ &= \sum_{i = 1}^n E[X_i] = \sum_{i = 1}^n p = np. \end{align*}\]

3.5 Variance, standard deviation, and independence

The variance of a random variable measures the spread of the variable around its expected value. Random variables with large variance can be quite far from their expected values, while rvs with small variance stay near their expected value. The standard deviation is simply the square root of the variance. The standard deviation also measures spread, but in more natural units which match the units of the random variable itself.

Let \(X\) be a random variable with expected value \(\mu = E[X]\). The variance of \(X\) is defined as \[ \text{Var}(X) = E[(X - \mu)^2] \] The standard deviation of \(X\) is written \(\sigma(X)\) and is the square root of the variance: \[ \sigma(X) = \sqrt{\text{Var}(X)} \]

Note that the variance of an rv is always positive (in the French sense19), as it is the expected value of a positive function.

The next theorem gives a formula for the variance that is often easier than the definition when performing computations.

\({\rm Var}(X) = E[X^2] - E[X]^2\).

Applying linearity of expected values (Theorem 3.8) to the definition of variance yields: \[ \begin{split} E[(X - \mu)^2] &= E[X^2 - 2\mu X + \mu^2]\\ &= E[X^2] - 2\mu E[X] + \mu^2 = E[X^2] - 2\mu^2 + \mu^2\\ &= E[X^2] - \mu^2, \end{split} \] as desired.

Let \(X\) be the value of a single die roll. We know that \(\mu = E[X] = 7/2\) and Example 3.28 showed that \(E[X^2] = 91/6\). The variance of a die roll is \[ {\rm Var}(X) = 91/6 - (7/2)^2 = 35/12 \approx 2.92 \] and the standard deviation is \(\sigma(X) = \sqrt{35/12} \approx 1.71\).

Checking with a simulation,

X <- sample(1:6, 100000, replace=TRUE)

sd(X)## [1] 1.706927Let \(X \sim \text{Binom}(3,0.5)\). Compute the standard deviation and variance of \(X\).

Here \(\mu = E[X] = 1.5\).

In Example 3.29, we saw that

\(E[(X-1.5)^2] = 0.75\). Then \(\text{Var}(X) = 0.75\) and the standard deviation is

\(\sigma(X) = \sqrt{0.75} \approx 0.866\). We can check both of these using simulation and

the built-in R functions var and sd:

X <- rbinom(10000, 3, 0.5)

var(X)## [1] 0.7481625sd(X)## [1] 0.86496383.5.1 Independent random variables

We say that two random variables \(X\) and \(Y\) are independent if knowledge of the value of \(X\) does not give probabilistic information about the value of \(Y\) and vice versa. As an example, let \(X\) be the number of days it snows at St. Louis Lambert Airport in a given month, and let \(Y\) be the number of kittens in a Eurasian lynx litter. It is difficult to imagine that knowing the value of one of these random variables could give information about the other one, and it is reasonable to assume that \(X\) and \(Y\) are independent. On the other hand, if \(X\) and \(W\) are the snowy days at Lambert and the number of flights delayed that month, then knowledge of one variable could well give probabilistic information about the other. For example, if you know it snowed a lot in March, it is likely that more flights were delayed. So \(X\) and \(W\) are not independent.

The key result in this section is Theorem 3.10. We will usually be assuming that random variables are independent so that we may apply part 2 of the theorem, that the variance of a sum is the sum of variances. For readers less interested in theory, a sense of when real-world variables are independent is all that is required to follow the rest of this textbook. In Exercises 3.24, 3.27, and 4.13, you may verify Theorem 3.10, part 2 by simulation in some special cases.

We would like to formalize the notion of independence with conditional probability. The natural statement is that for any \(E, F\) subsets of \({\mathbb R}\), the conditional probability \(P(X\in E|Y\in F)\) is equal to \(P(X \in E)\). There are several issues with formalizing the notion of independence that way, so we give a definition that is somewhat further removed from the intuition.

A collection of rvs \(X_1, \dotsc, X_n\) are called independent \(\iff\) for all \(x_1, \dotsc, x_n\) the events \(X_1 \le x_1\), \(X_2 \le x_2\), \(\dotsc\), and \(X_n \le x_n\) are mutually independent.

For two random variables \(X\) and \(Y\), independence means that for all \(x\) and \(y\),

\[ P(X \le x \text{ and } Y \le y) = P(X \le x) \cdot P(Y \le y). \]

If \(X\) and \(Y\) are discrete, an equivalent statement is that for all \(x\) and \(y\),

\[ P(X = x \text{ and } Y = y) = P(X = x) \cdot P(Y = y) \]

Dividing both sides by \(P(Y=y)\), we see that two discrete independent random variables satisfy \(P(X = x | Y = y) = P(X = x)\), which returns to the intuition that “knowing something about \(Y\) tells you nothing about \(X\).”

Let \(X\) be a random variable and \(c\) a constant. Then \[ \begin{aligned} \text{Var}(cX) &= c^2\text{Var}(X)\\ \sigma(cX) &= |c| \sigma(X) \end{aligned} \]

Let \(X_1, X_2, \dotsc, X_n\) be independent random variables. Then \[ {\rm Var}(X_1 + X_2 + \dotsb + X_n) = {\rm Var}(X_1) + \dotsb + {\rm Var}(X_n) \]

For part 1, \[\begin{align*} {\rm Var}(cX) =& E[(cX)^2] - E[cX]^2 = c^2E[X^2] - (cE[X])^2\\ =&c^2\bigl(E[X^2] - E[X]^2) = c^2{\rm Var}(X) \end{align*}\]

For part 2, we will only treat the special case of two independent discrete random variables \(X\) and \(Y\). We need to show that \({\rm Var}(X + Y) = {\rm Var}(X) + {\rm Var}(Y)\).

\[\begin{align*} {\rm Var}(X + Y) &= E[(X + Y)^2] - E[X + Y]^2 \\ &= E[X^2 + 2XY + Y^2] - (E[X] + E[Y])^2\\ &= E[X^2] - E[X]^2 + E[Y^2] - E[Y]^2 + 2E[XY] - 2E[X]E[Y]\\ &= {\rm Var}(X) + {\rm Var}(Y) + 2\left(E[XY] - E[X]E[Y]\right) \end{align*}\]

The value \(E[XY] - E[X]E[Y]\) is called the covariance of \(X\) and \(Y\), written \({\text{Cov}}(X, Y)\). To finish, we need to show that the covariance is zero when \(X\) and \(Y\) are independent.

For any \(z\), the event \(XY = z\) is the disjoint union of events \((X = x) \cap (Y=y)\) where \(xy = z\). Summing all \(x\) and \(y\) with \(xy = z\) and then summing over \(z\) is the same as simply summing over all possible \(x\) and \(y\). So,

\[\begin{align*} E[XY] &= \sum_z z P(XY = z) = \sum_z \sum_{x,y \text{ with } xy=z} z P\bigl((X = x) \cap (Y = y)\bigr) \\ &= \sum_{x,y} xy P(X=x)\cdot P(Y =y) = \left(\sum_x x P(X = x)\right)\left(\sum_y y P(Y = y)\right) \\ &= E[X]E[Y]. \end{align*}\]

If \(X\) and \(Y\) are independent rvs, then \({\rm Var}(X - Y) = {\rm Var}(X) + {\rm Var}(Y)\).

Let \(X \sim \text{Binom}(n, p)\). We have seen that \(X = \sum_{i = 1}^n X_i\), where \(X_i\) are independent Bernoulli random variables. Therefore,

\[\begin{align*} {\text {Var}}(X) &= {\text {Var}}(\sum_{i = 1}^n X_i)\\ &= \sum_{i = 1}^n {\text {Var}}(X_i) \\ &= \sum_{i = 1}^n p(1 - p) = np(1-p) \end{align*}\] where we have used that the variance of a Bernoulli random variable is \(p(1- p)\). Indeed, \(E[X_i^2] -E[X_i]^2 = p - p^2 = p(1 - p)\).

3.6 Poisson, negative binomial, and hypergeometric

In this section, we discuss three other commonly occurring special types of discrete random variables.

3.6.1 Poisson

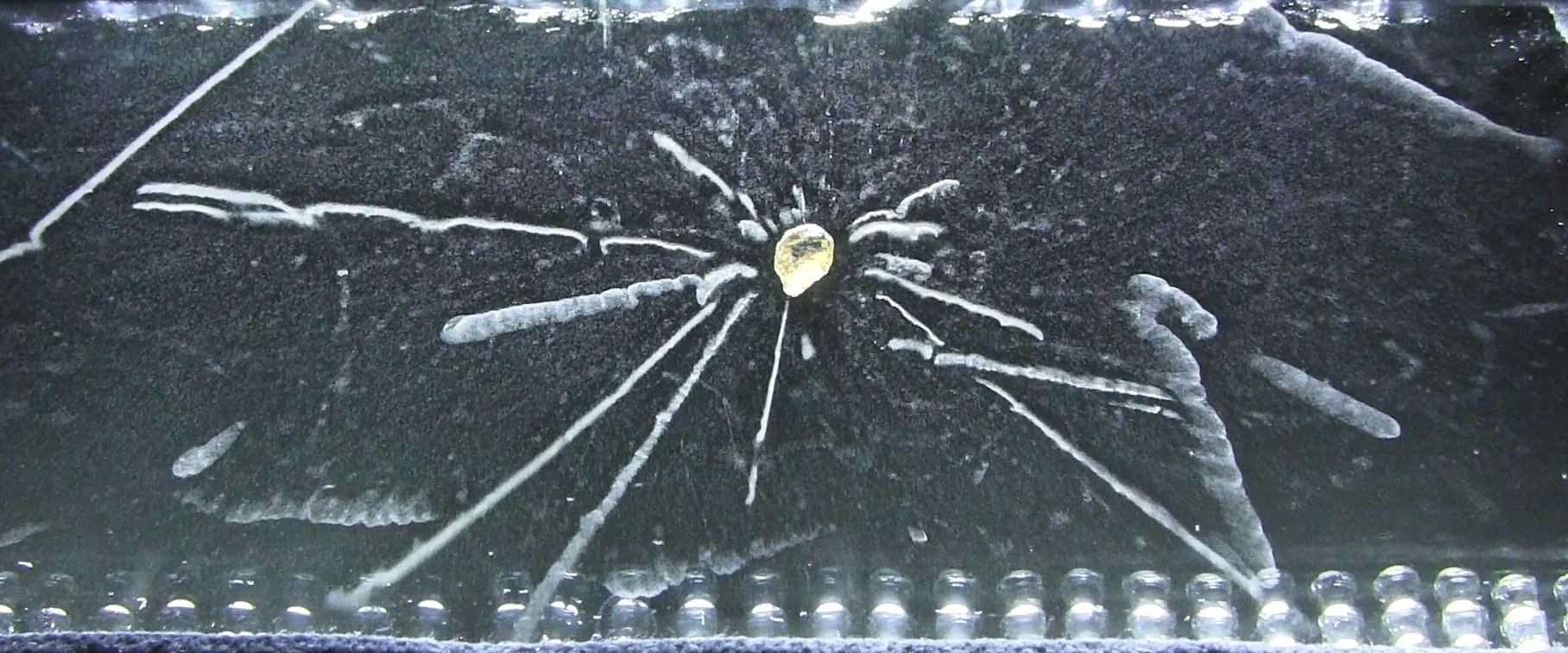

A Poisson process models events that happen at random times. For example, radioactive decay is a Poisson process, where each emission of a radioactive particle is an event. Other examples modeled by the Poisson process are meteor strikes on the surface of the moon, customers arriving at a store, hits on a web page, and car accidents on a given stretch of highway.

Figure 3.5: Radioactivity of a Thorite mineral seen in a cloud chamber. (Photo credit: Julien Simon)

Julien Simon. This file is licensed under the Creative Commons Attribution-Share Alike 3.0 Unported license. https://commons.wikimedia.org/wiki/File:Radioactivity_of_a_Thorite_mineral_seen_in_a_cloud_chamber.jpg

In this section, we will discuss one natural random variable attached to a Poisson process: the Poisson random variable. We will see another, the exponential random variable, in Section 4.5.2. The Poisson random variable is discrete, and can be used to model the number of events that happen in a fixed time period. The exponential random variable models the time between events.

We begin by defining a Poisson process. Suppose events occur spread over time. The events form a Poisson process if they satisfy the following assumptions:

- The probability of an event occurring in a time interval \([a,b]\) depends only on the length of the interval \([a,b]\).

- If \([a,b]\) and \([c,d]\) are disjoint time intervals, then the probability that an event occurs in \([a,b]\) is independent of whether an event occurs in \([c,d]\). (That is, knowing that an event occurred in \([a,b]\) does not change the probability that an event occurs in \([c,d]\).)

- Two events cannot happen at the same time. (Formally, we need to say something about the probability that two or more events happens in the interval \([a, a + h]\) as \(h\to 0\).)

- The probability of an event occurring in a time interval \([a,a + h]\) is roughly \(\lambda h\), for some constant \(\lambda\).

Property 4 says that events occur at a certain rate, which is denoted by \(\lambda\).

The random variable \(X\) is said to be a Poisson random variable with rate \(\lambda\) if the pmf of \(X\) is given by \[ p(x) = e^{-\lambda} \frac{\lambda^x}{x!} \qquad x = 0, 1, 2, \ldots \] The parameter \(\lambda\) is required to be larger than 0. We write \(X \sim \text{Pois}(\lambda)\).

Let \(X\) be the random variable that counts the number of occurrences in a Poisson process with rate \(\lambda\) over one unit of time. Then \(X\) is a Poisson random variable with rate \(\lambda\).

We have not stated Assumption 3.1.4 precisely enough for a formal proof, so this is only a heuristic argument.

Suppose we break up the time interval from 0 to 1 into \(n\) pieces of equal length. Let \(X_i\) be the number of events that happen in the \(i\)th interval. When \(n\) is big, \((X_i)_{i = 1}^n\) is approximately a sequence of \(n\) Bernoulli trials, each with probability of success \(\lambda/n\). Therefore, if we let \(Y_n\) be a binomial random variable with \(n\) trials and probability of success \(\lambda/n\), we have:

\[\begin{align*} P(X = x) &= P\bigl(\sum_{i = 1}^n X_i = x\bigr)\\ &\approx P(Y_n = x)\\ &= {\binom{n}{x}} (\lambda/n)^x (1 - \lambda/n)^{(n - x)} \\ &\approx \frac{n^x}{x!} \frac{1}{n^x} \lambda^x (1 - \lambda/n)^n\\ &\to \frac{\lambda^x}{x!}{e^{-\lambda}} \qquad {\text {as}} \ n\to \infty \end{align*}\]

The key to defining the Poisson process formally correctly is to ensure that the above computation is mathematically justified.

We leave the proof that \(\sum_x p(x) = 1\) as Exercise 3.36, along with the proof of the following Theorem.

The mean and variance of a Poisson random variable are both \(\lambda\).

Though the proof of the mean and variance of a Poisson is an exercise, we can also give a heuristic argument. From above, a Poisson with rate \(\lambda\) is approximately a Binomial rv \(Y_n\) with \(n\) trials and probability of success \(\lambda/n\) when \(n\) is large. The mean of \(Y_n\) is \(n \times \lambda/n = \lambda\), and the variance is \(n (\lambda/n)(1 - \lambda/n) \to \lambda\) as \(n\to\infty\).

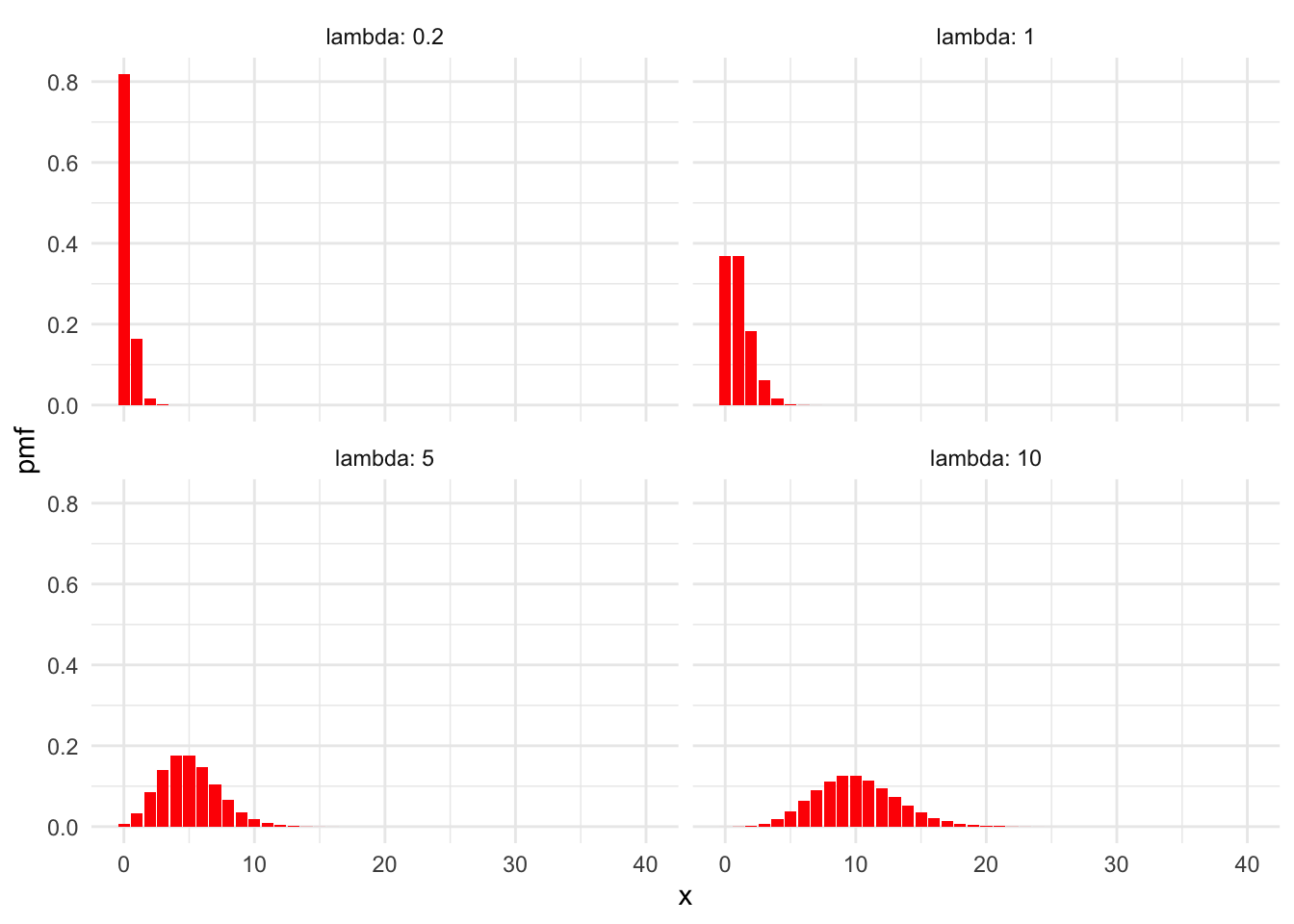

Figure 3.6: Poisson pmfs with various means.

Figure 3.6 shows plots of Poisson pmfs with various means \(\lambda\). Observe in Figure 3.6 that larger values of \(\lambda\) correspond to more spread-out distributions, since the standard deviation of a Poisson rv is \(\sqrt{\lambda}\). Also, for larger values of \(\lambda\), the Poisson distribution becomes approximately normal.

The functions dpois, ppois and rpois are available for working with a Poisson random variable \(X \sim \text{Pois}(\lambda)\):

dpois(x,lambda)is the pmf, and gives \(P(X = x)\).ppois(x,lambda)gives \(P(X \leq x)\).rpois(N,lambda)simulates \(N\) random values of \(X\).

The Taurids meteor shower is visible on clear nights in the fall and can have visible meteor rates around five per hour. What is the probability that a viewer will observe exactly eight meteors in two hours?

We let \(X\) be the number of observed meteors in two hours, and model \(X \sim \text{Pois}(10)\), since we expect \(\lambda = 10\) meteors in our two-hour time period. Computing exactly, \[ P(X = 8) = \frac{10^8}{8!}e^{-10} \approx 0.1126.\]

Using R, and the pmf dpois:

dpois(8, 10)## [1] 0.112599Or, by simulation with rpois:

meteors <- rpois(10000, 10)

mean(meteors == 8)## [1] 0.1118We find that the probability of seeing exactly eight meteors is about 0.11.

Suppose a typist makes typos at a rate of 3 typos per 10 pages. What is the probability that they will make at most one typo on a five-page document?

We let \(X\) be the number of typos in a five-page document. Assume that typos follow the properties of a Poisson rv. It is not clear that they follow it exactly. For example, if the typist has just made a mistake, it is possible that their fingers are no longer on home position, which means another mistake is likely soon after. This would violate the independence property (2) of a Poisson process. Nevertheless, modeling \(X\) as a Poisson rv is reasonable.

The rate at which typos occur per five pages is 1.5, so we use \(\lambda = 1.5\). Then we can compute

\(P(X \leq 1) =\) ppois(1,1.5) \(=0.5578\). The typist has a 55.78% chance of making at most one typo in a five-page document.

3.6.2 Negative binomial

Suppose you repeatedly roll a fair die. What is the probability of getting exactly 14 non-sixes before getting your second six?

As you can see, this is an example of repeated Bernoulli trials with \(p = 1/6\), but it isn’t exactly geometric because we are waiting for the second success. This is an example of a negative binomial random variable.

Suppose that we observe a sequence of Bernoulli trials with probability of success \(p\). If \(X\) denotes the number of failures before the \(n\)th success, then \(X\) is a negative binomial random variable with parameters \(n\) and \(p\). The probability mass function of \(X\) is given by

\[ p(x) = \binom{x + n - 1}{x} p^n (1 - p)^x, \qquad x = 0,1,2\ldots \]

The mean of a negative binomial is \(n p/(1 - p)\), and the variance is \(n p /(1 - p)^2\). The root R function to use with negative binomials is nbinom, so dnbinom is how we can compute probabilities in R. The function dnbinom uses prob for \(p\) and size for \(n\) in our formula.

Suppose you repeatedly roll a fair die. What is the probability of getting exactly 14 non-sixes before getting your second six?

dnbinom(x = 14, size = 2, prob = 1 / 6)## [1] 0.03245274Note that when size = 1, negative binomial is exactly a geometric random variable, e.g.,

dnbinom(x = 14, size = 1, prob = 1 / 6)## [1] 0.01298109dgeom(14, prob = 1 / 6)## [1] 0.012981093.6.3 Hypergeometric

Consider the experiment which consists of sampling without replacement from a population that is partitioned into two subgroups – one subgroup is labeled a “success,” and the other subgroup is labeled a “failure.” The random variable that counts the number of successes in the sample is an example of a hypergeometric random variable.

To make things concrete, we suppose that we have \(m\) successes and \(n\) failures. We take a sample of size \(k\) (without replacement) and we let \(X\) denote the number of successes. Then \[ P(X = x) = \frac{\binom{m}{x} {\binom{n}{k - x}} }{\binom{m + n}{k}} \] We also have \[ E[X] = k \left(\frac{m}{m + n}\right) \] which is easy to remember because \(k\) is the number of samples taken, and the probability of a success on any one sample (with no knowledge of the other samples) is \(\frac {m}{m + n}\). The variance is similar to the variance of a binomial as well, \[ V(X) = k \cdot \frac{m}{m+n} \cdot \frac{n}{m+n} \cdot \frac{m + n - k}{m + n - 1} \] but we have the “fudge factor” of \(\frac {m + n - k}{m + n - 1}\), which means the variance of a hypergeometric is less than that of a binomial. In particular, when \(m + n = k\), the variance of \(X\) is 0. Why?

When \(m + n\) is much larger than \(k\), we will approximate a hypergeometric random variable with a binomial random variable with parameters \(n = m + n\) and \(p = \frac {m}{m + n}\). Finally, the R root for hypergeometric computations is hyper. In particular, we have the following example:

15 US citizens and 20 non-US citizens pass through a security line at an airport. Ten are randomly selected for further screening. What is the probability that 2 or fewer of the selected passengers are US citizens?

In this case, \(m = 15\), \(n = 20\), and \(k = 10\). We are looking for \(P(X \le 2)\), so

sum(dhyper(x = 0:2, m = 15, n = 20, k = 10))## [1] 0.08677992You can find a summary of the discrete random variables together with their R commands in Section 4.6.

Vignette: Loops in R

Iteration is the process of repeating a task to produce a result.

In many traditional computer programming languages, iteration is done explicitly with a structure called a loop.

With R, it is generally preferable and faster to use vector operations or implicit loops such as replicate.

The purrr package also provides powerful tools for iterating.

However, sometimes it is easiest just to write a conventional loop, and R does provide explicit looping constructs.

This vignette explains how to use while loops in the context of simulations.

A while loop repeats one or more statements until a certain condition is no longer met.

It is crucial when writing a while loop that you ensure that the condition to exit the loop will eventually be satisfied! The format looks like this.

i <- 0 # initialize

while (i < 2) { # check condition

print(i)

i <- i + 1 # increment

}## [1] 0

## [1] 1R sets i to 0 and then checks whether i < 2 (it is). It then prints i and adds 1 to i.

It checks again – is i < 2? Yes, so it prints i and adds 1 to it.

At this point i is not less than 2, so R exits the loop.

As a more realistic example of while loops in action, suppose we want to estimate the expected value of a

geometric random variable \(X\).

Example 3.13 simulated tossing a fair coin until heads occurs, and counting the number of tails. In this case, \(X\) is geometric with \(p=0.5\) and so has \(E[X] = 1\). The approach was to simulate 100 coin flips and assume that heads would appear before we ran out of flips. The probability of flipping 100 tails in a row is negligible, so 100 flips was enough.

To simulate a geometric rv where \(p\) is small and unknown, it may take a large number of trials before observing the first success.

If each Bernoulli trial is actually a complicated calculation, we want to stop as soon as the first success occurs.

Under these circumstances, it is better to use a while loop.

Here is a simulation of flipping a coin until heads is observed, using a while loop.

In each iteration of the loop, we flip the coin with sample, print the result, and then add one to the count num_tails.

num_tails <- 0

coin_toss <- ""

while (coin_toss != "Head") {

coin_toss <- sample(c("Head", "Tail"), 1)

print(coin_toss)

if (coin_toss == "Tail") {

num_tails <- num_tails + 1

}

}## [1] "Tail"

## [1] "Tail"

## [1] "Head"num_tails## [1] 2This gives one simulated value of \(X\). In this case we found \(X = 2\), because two tails appeared before the first head. To estimate \(E[X]\),

replicate the experiment.

sim_data <- replicate(10000, {

num_tails <- 0

coin_toss <- ""

while(coin_toss != "Head") {

coin_toss <- sample(c("Head", "Tail"), 1)

if(coin_toss == "Tail") {

num_tails <- num_tails + 1

}

}

num_tails

})

mean(sim_data)## [1] 0.9847The mean of our simulated data is 0.9847, which agrees with the theoretical value \(E[X] = 1\).

Exercises

Exercises 3.1 – 3.3 require material through Section 3.1.

Let \(X\) be a discrete random variable with probability mass function given by \[ p(x) = \begin{cases} 1/4 & x = 0 \\ 1/2 & x = 1\\ 1/8 & x = 2\\ 1/8 & x = 3 \end{cases} \]

- Verify that \(p\) is a valid probability mass function.

- Find \(P(X \ge 2)\).

- Find \(P(X \ge 2\ |\ X \ge 1)\).

- Find \(P(X \ge 2 \cup X \ge 1)\).

Let \(X\) be a discrete random variable with probability mass function given by \[ p(x) = \begin{cases} 1/4 & x = 0 \\ 1/2 & x = 1\\ 1/8 & x = 2\\ 1/8 & x = 3 \end{cases} \]

- Use

sampleto create a sample of size 10,000 from \(X\) and estimate \(P(X = 1)\) from your sample. Your result should be close to 1/2. - Use

tableon your sample from part (a) to estimate the pmf of \(X\) from your sample. Your result should be similar to the pmf given in the problem.

Let \(X\) be a discrete random variable with probability mass function given by \[ p(x) = \begin{cases} C/4 & x = 0 \\ C/2 & x = 1\\ C & x = 2\\ 0 & \mathrm {otherwise} \end{cases} \]

Find the value of \(C\) that makes \(p\) a valid probability mass function.

Exercises 3.4 – 3.15 require material through Section 3.2.

Give an example of a probability mass function \(p\) whose associated random variable has mean 0.

Find the mean of the random variable \(X\) given in Exercise 3.1.

Let \(X\) be a random variable with pmf given by \(p(x) = 1/10\) for \(x = 1, \ldots, 10\) and \(p(x) = 0\) for all other values of \(x\). Find \(E[X]\) and confirm your answer using a simulation.

Suppose you roll two ordinary dice. Calculate the expected value of their product.

Suppose that a hat contains slips of papers containing the numbers 1, 2, and 3. Two slips of paper are drawn without replacement. Calculate the expected value of the product of the numbers on the slips of paper.

Pick an integer from 0 to 999 with all possible numbers equally likely. What is the expected number of digits in your number?

In the summer of 2020, the U.S. was considering pooled testing of COVID-19.20 This problem explores the math behind pooled testing. Since the availability of tests is limited, the testing center proposes the following pooled testing technique:

- Two samples are randomly selected and combined. The combined sample is tested.

- If the combined sample tests negative, then both people are assumed negative.

- If the combined sample tests positive, then both people need to be retested for the disease.

Suppose in a certain population, 5% of the people being tested for COVID-19 actually have COVID-19. Let \(X\) be the total number of tests that are run in order to test two randomly selected people.

- What is the pmf of \(X\)?

- What is the expected value of \(X\)?

- Repeat the above, but imagine that three samples are combined, and let \(Y\) be the total number of tests that are run in order to test three randomly selected people. If the pooled test is positive, then all three people need to be retested individually.

- If your only concern is to minimize the expected number of tests given to the population, which technique would you recommend?

A roulette wheel has 38 slots and a ball that rolls until it falls into one of the slots, all of which are equally likely. Eighteen slots are black numbers, eighteen are red numbers, and two are green zeros. If you bet on “red,” and the ball lands in a red slot, the casino pays you your bet; otherwise, the casino wins your bet.

- What is the expected value of a $1 bet on red?

- Suppose you bet $1 on red, and if you win you “let it ride” and bet $2 on red. What is the expected value of this plan?

One (questionable) roulette strategy is called bet doubling: You bet $1 on red, and if you win, you pocket the $1. If you lose, you double your bet so you are now betting $2 on red, but have lost $1. If you win, you win $2 for a $1 profit, which you pocket. If you lose again, you double your bet to $4 (having already lost $3). Again, if you win, you have $1 profit, and if you lose, you double your bet again. This guarantees you will win $1, unless you run out of money to keep doubling your bet.

- Say you start with a bankroll of $127. How many bets can you lose in a row without losing your bankroll?

- If you have a $127 bankroll, what is the probability that bet doubling wins you $1?

- What is the expected value of the bet doubling strategy with a $127 bankroll?

- If you play the bet doubling strategy with a $127 bankroll, how many times can you expect to play before you lose your bankroll?

Flip a fair coin 10 times and let \(X\) be the proportion of times that a head is followed by another head. Discard the sequence of ten tosses if you don’t obtain a head in the first nine tosses. What is the expected value of \(X\)? (Note: this is not asking you to estimate the conditional probability of getting heads given that you just obtained heads.)

See Miller and Sanjurjo21 for a connection to the “hot hand” fallacy.

To play the Missouri lottery Pick 3, you choose three digits 0-9 in order. Later, the lottery selects three digits at random, and you win if your choices match the lottery values in some way. Here are some possible bets you can play:

- $1 Straight wins if you correctly guess all three digits, in order. It pays $600.

- $1 Front Pair wins if you correctly guess the first two digits. It pays $60.

- $1 Back Pair wins if you correctly guess the last two digits. It pays $60.

- $6 6-Way Combo wins if the three digits are different and you guess all three in any order. It pays $600.

- $3 3-Way Combo wins if two of the three digits are the same, and you guess all three in any order. It pays $600.

- $1 1-Off lets you win if some of your digits are off by 1 (9 and 0 are considered to be one off from each other). If you get the number exactly correct, you win $300. If you have one digit off by 1, you win $29. If you have two digits off by 1, you win $4, and if all three of your digits are off by 1 you win $9.

Consider the value of your bet to be your expected winnings per dollar bet. What value do each of these bets have?

Let \(k\) be a positive integer and let \(X\) be a random variable with pmf given by \(p(x) = 1/k\) for \(x = 1, \ldots, k\) and \(p(x) = 0\) for all other values of \(x\). Find \(E[X]\).

Exercises 3.16 – 3.20 require material through Section 3.3.

Suppose you take a 20-question multiple choice test, where each question has four choices. You guess randomly on each question.

- What is your expected score?

- What is the probability you get 10 or more questions correct?

Steph Curry is a 91% free-throw shooter. Suppose that he shoots 10 free throws in a game.

- What is his expected number of shots made?

- What is the probability that he makes at least eight free throws?

Steph Curry is a 91% free-throw shooter. He decides to shoot free throws until his first miss. What is the probability that he shoots exactly 20 free throws (including the one he misses)?

In October 2020, the YouTuber called “Dream” posted a speedrun of Minecraft and was accused of cheating.

In Minecraft, when you trade with a piglin, the piglin gives you an ender pearl 4.7% of the time. Dream got 42 ender pearls after 262 trades with piglin.

- If you trade 262 times, what is the expected number of ender pearls you receive?

- What is the probability of getting 42 or more ender pearls after 262 trades?

When you kill a blaze, you have a 50% chance of getting a blaze rod. Dream got 211 blaze rods after killing 305 blazes.

- If you kill 305 blazes, what is the expected number of blaze rods you receive?

- What is the probability of getting 211 or more blaze rods after killing 305 blazes?

- Do you think Dream was cheating?

Let \(X \sim \text{Geom}(p)\) be a geometric rv with success probability \(p\). Show that the standard deviation of \(X\) is \(\frac{\sqrt{1-p}}{p}\). Hint: Follow the proof of Theorem 3.6, but take the derivative twice with respect to \(q\). This will compute \(E[X(X-1)]\). Use \(E[X(X-1)] = E[X^2] - E[X]\) and Theorem 3.9 to finish.

Exercises 3.21 – 3.22 require material through Section 3.4.

Let \(X\) be a discrete random variable with probability mass function given by \[ p(x) = \begin{cases} 1/4 & x = 0 \\ 1/2 & x = 1\\ 1/8 & x = 2\\ 1/8 & x = 3 \end{cases} \]

- Find the pmf of \(Y = X - 1\).

- Find the pmf of \(U = X^2\).

- Find the pmf of \(V = (X - 1)^2\).

Let \(X\) and \(Y\) be random variables such that \(E[X] = 2\) and \(E[Y] = 3\).

- Find \(E[4X + 5Y]\).

- Find \(E[4X - 5Y + 2]\).

Exercises 3.23 – 3.32 require material through Section 3.5.

Find the variance and standard deviation of the rv \(X\) from Exercise 3.1.

Roll two ordinary dice and let \(Y\) be their sum.

- Compute the pmf for \(Y\) exactly.

- Compute the mean and standard deviation of \(Y\).

- Check that the variance of \(Y\) is twice the variance for the roll of one die (see Example 3.32).

Let \(X\) be a random variable such that \(E[X] = 2\) and \(\text{Var}(X) = 9\).

- Find \(E[X^2]\).

- Find \(E[(2X - 1)^2]\).

- Find \(\text{Var}(2X - 1)\).

Let \(X\) and \(Y\) be random variables. Show that the covariance of \(X\) and \(Y\) can be rewritten in the following way: \[ E[XY] - E[X]E[Y] = E\left[(X - \mu_X)(Y - \mu_Y)\right]. \]

Let \(X \sim \text{Binom}(100,0.2)\) and \(Y \sim \text{Binom}(40,0.5)\) be independent rvs.

- Compute \({\rm Var}(X)\) and \({\rm Var}(Y)\) exactly.

- Simulate the random variable \(X + Y\) and compute its variance. Check that it is equal to \({\rm Var}(X) + {\rm Var}(Y)\).

In an experiment where you toss a fair coin twice, let:

- \(X\) be 1 if the first toss is heads and 0 otherwise.

- \(Y\) be 1 if the second toss is heads and 0 otherwise.

- \(Z\) be 1 if both tosses are the same and 0 otherwise.

Show that \(X\) and \(Y\) are independent. Show that \(X\) and \(Z\) are independent. Show that \(Y\) and \(Z\) are independent. Finally, show that \(X\), \(Y\), and \(Z\) are not mutually independent.

Let \(X\) be a random variable with mean \(\mu\) and standard deviation \(\sigma\). Find the mean and standard deviation of \(\frac{X - \mu}{\sigma}\).

Suppose that 55% of voters support Proposition A.

- You poll 200 voters. What is the expected number that support the measure?

- What is the margin of error for your poll (two standard deviations)?

- What is the probability that your poll claims that Proposition A will fail?

- How large a poll would you need to reduce your margin of error to 2%?

Suppose 27 people write their names down on slips of paper and put them in a hat. Each person then draws one name from the hat. Estimate the expected value and standard deviation of the number of people who draw their own name. (Assume no two people have the same name!)

In an experiment22 to test whether participants have absolute pitch, scientists play notes and the participants say which of the 12 notes is being played. The participant gets 1 point for each note that is correctly identified, and 3/4 of a point for each note that is off by a half-step. (Note that if the possible guesses are 1:12, then the difference between 1 and 12 is a half-step, as is the difference between any two values that are 1 apart.)

- If the participant hears 36 notes and randomly guesses each time, what is the expected score of the participant?

- If the participant hears 36 notes and randomly guesses each time, what is the standard deviation of the score of the participant? Assume each guess is independent.

Exercises 3.33 – 3.39 require material through Section 3.6.

Let \(X\) be a Poisson rv with mean 3.9.

- Create a plot of the pmf of \(X\).

- What is the most likely outcome of \(X\)?

- Find \(a\) such that \(P(a \le X \le a + 1)\) is maximized.

- Find \(b\) such that \(P(b \le X \le b + 2)\) is maximized.

We stated in the text that a Poisson random variable \(X\) with rate \(\lambda\) is approximately a Binomial random variable \(Y\) with \(n\) trials and probability of success \(\lambda/n\) when \(n\) is large. Suppose \(\lambda = 2\) and \(n = 300\). What is the largest absolute value of the difference between \(P(X = x)\) and \(P(Y = x)\)?

The charge \(e\) on one electron is too small to measure. However, one can make measurements of the current \(I\) passing through a detector. If \(N\) is the number of electrons passing through the detector in one second, then \(I = eN\). Assume \(N\) is Poisson. Show that the charge on one electron is given by \(\frac{{\rm Var}(I)}{E[I]}\).

As stated in the text, the pdf of a Poisson random variable \(X \sim \text{Pois}(\lambda)\) is \[ p(x) = \frac 1{x!} \lambda^x e^{-\lambda}, \quad x = 0,1,\ldots \]

Prove the following:

- \(p\) is a pdf. (You need to show that \(\sum_{x=0}^{\infty} p(x) = 1\).)

- \(E(X) = \lambda.\) (Show that \(\sum_{x=0}^{\infty} xp(x) = \lambda\).)

- \(\text{Var}(X) = \lambda.\) (Compute \(E[X(X-1)]\) by summing the infinite series \(\sum_{x=0}^{\infty} x(x-1)p(x)\). Use \(E[X(X-1)] = E[X^2] - E[X]\) and Theorem 3.9 to finish.)

Let \(X\) be a negative binomial random variable with probability of success \(p\) and size \(n\), so that we are counting the number of failures before the \(n\)th success occurs.

- Argue that \(X = \sum_{i = 1}^n X_i\) where \(X_i\) are independent geometric random variables with probability of success \(p\).

- Use part (a) to show that \(E[X] = \frac{np}{1 - p}\) and the variance of \(X\) is \(\frac{np}{(1 - p)^2}\).

Show that if \(X\) is a Poisson random variable with mean \(\lambda\), and \(Y\) is a negative binomial random variable with \(E[Y] = \frac{np}{1 - p} = \lambda\), then \(\text{Var}(X) \le \text{Var}(Y)\).

In the game of Scrabble, players make words using letter tiles, see Exercise 2.19. The tiles consist of 42 vowels and 58 non-vowels (including blanks).

- If a player draws 7 tiles (without replacement), what is the probability of getting 7 vowels?

- If a player draws 7 tiles (without replacement), what is the probability of 2 or fewer vowels?

- What is the expected number of vowels drawn when drawing 7 tiles?

- What is the standard deviation of the number of vowels drawn when drawing 7 tiles?

Deathrolling in World of Warcraft works as follows. Player 1 tosses a 1000-sided die. Say they get \(x_1\). Then player 2 tosses a die with \(x_1\) sides on it. Say they get \(x_2\). Player 1 tosses a die with \(x_2\) sides on it. The player who loses is the player who first rolls a 1.

- Estimate the expected total number of rolls before a player loses.

- Estimate the probability mass function of the total number of rolls.

- Estimate the probability that player 1 wins.

In this text, we almost exclusively consider discrete random variables with integer values, but more generally a discrete rv could take values in any subset of \(\mathbb{R}\) consisting of countably many points.↩︎

Jean-Michel Gaillard et al., “One Size Fits All: Eurasian Lynx Females Share a Common Optimal Litter Size,” The Journal of Animal Ecology 83 (July 2013), https://doi.org/10.1111/1365-2656.12110.↩︎

Using the sample size as a constant in

sampleand in the computation below can lead to errors if you change the value in one place but not the other. We will see in Chapter 5 thatproportions(table(X))is a better way to estimate probability mass functions from a sample.↩︎Amos Tversky and Thomas Gilovich, “The Cold Facts about the ‘Hot Hand’ in Basketball,” CHANCE 2, no. 1 (1989): 16–21, https://doi.org/10.1080/09332480.1989.11882320.↩︎

The careful reader will notice that this is an example of a discrete random variable that does not take integer values.↩︎

That is, the variance is greater than or equal to zero.↩︎

P W Cunningham, “The Health 202: The Trump Administration Is Eyeing a New Testing Strategy for Coronavirus, Anthony Fauci Says,” Washington Post, June 26, 2020.↩︎

Joshua B Miller and Adam Sanjurjo, “A Cold Shower for the Hot Hand Fallacy: Robust Evidence That Belief in the Hot Hand Is Justified,” IGIER Working Paper, no. 518 (August 2019), https://doi.org/10.2139/ssrn.2450479.↩︎

E Alexandra Athos et al., “Dichotomy and Perceptual Distortions in Absolute Pitch Ability,” Proceedings of the National Academy of Sciences of the United States of America 104, no. 37 (2007): 14795–800, https://doi.org/10.1073/pnas.0703868104.↩︎